The Four Horsemen of the AI Apocalypse

Sloprot, Futility-Sniping, Hazardlock, and Desynchronization: How AI Risks Undermine Culture, Motivation, Progress, & Alignment

…is just a catchy title ;)—but—

Big AI is coming for you.

Or so it would seem if you pay attention to the numerous voices that have been sounding alarm bells about AI Risk in recent years. While some of these voices have been outspoken about the issue for years (see Yudkowsky’s HPMOR for a broad overview of existential risks, or writings on AI Risk from the LessWrong community), the cacophony of opinions on the topic has been ever-expanding since the debut of large language models like ChatGPT, which launched in November of 2022. Since then, the models have only gotten better, and the number of people who are worried feels like it has grown in lockstep with the models’ capabilities. Debates have ranged from questions about whether or not the models are edging towards consciousness to worries about whether they are going to (or should) kill us all when they become ‘powerful enough’.

A lot of conversations about AI risk and safety exist in this sort of abstract philosophical space—a long way from today in a galaxy far far away. While many of these questions are worth asking, my intuition is that AI is already causing serious problems in society. With that being said, this post will focus on something less apocalyptic, but arguably more urgent: the cultural and individual risks AI is introducing or exacerbating as it becomes more embedded in our everyday lives. These risks are here today in some form or another, and they matter. They may not kill us, but they can certainly cause our society (and our minds) to slowly decay.

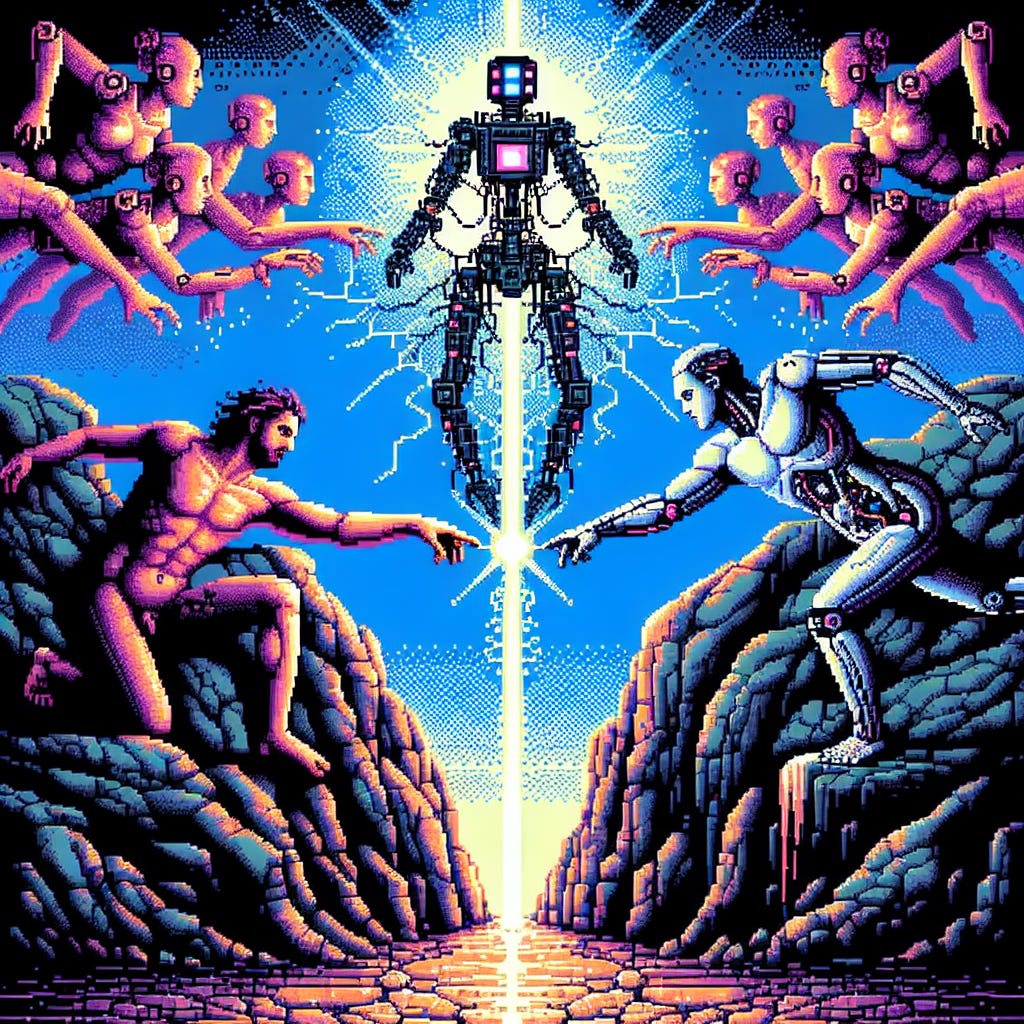

When existing terms don’t fully capture the ideas one is trying to express, new words may be helpful. I’ve come to refer to these risks as the Four Horsemen of the AI Apocalypse: Sloprot, Futility-Sniping, Hazardlock and Desynchronization. These are not existential risks. These risks are about erosion. Of trust. Of creativity. Of meaning, and of connection. If we don’t start thinking about them now (if I don’t start thinking about them now), they could grow into the sort of crises you and I may regret not taking seriously sooner.

The First Horseman: Sloprot

AI makes it easier than ever to create text, images, music and (increasingly) videos. This seems like a good thing until you consider the reams of low-effort, derivative crap the internet (and the physical world) is already full of. The overproduction of this sort of content is the most immediate risk AI advancement or proliferation poses to society.

By sloprot, I mean the risk that AI accelerates the destruction of culture by filling up our feeds, screens and minds with so much “slop” that we can no longer distinguish what is valuable and interesting from what is designed to hijack our senses. Not all AI creations are automatically a form of slop (this is another point of contention worth discussing separately), but many of them are. I won’t try to define the boundaries of what is and is not slop in this essay, but to quote former Supreme Court Justice Potter Stewart, “I know it when I see it”.

Imagine a world where every TikTok trend is generated by a bot (or worse—an AI Agent), and every recommendation on your social media feed points to a post or video that was generated by AI. What happens to our attention spans when more content is created in a day than was created in a decade, a decade ago? The sheer volume of slop could rot our collective attention span, make it even harder to trust what we see online and leave us (and our future generations) with a culture that is defined by kitsch instead of creativity.

The deeper risk here is not that AI will make more low-quality content (we are already doing a great job at that); it’s that an excess of low-quality content could destroy our collective sense of taste. When it’s trivial to manufacture artificial solutions to satiate our natural desires, we run the risk of ‘gigafrying’ our reward systems. In other words, when instant gratification becomes the norm, we stop valuing the natural sources of gratification that might actually improve our well-being instead of consuming it.

My worry is that the people most vulnerable to misinformation and manipulation might become even more susceptible to slop-driven ‘mind viruses’ than before. Who needs to eat raspberries when they can have gummy-textured, raspberry-flavored slop? To the extent that real life mimics the internet, the long-term consequence of sloprot is a cultural (or even spiritual) rot.

So how do we stop the rot now that the genie is out of the bottle? In short, the answer is discernment. As individuals, we have to develop the discernment to become active participants in our consumption habits. Being (or becoming) mindful of our information diets is more important now than it has ever been. It’s unhealthy to allow algorithmic feeds to dictate our interests to us when we are being attacked with Trojan horse after Trojan horse full of slop. We have to demand better of ourselves and search for originality, depth, intention and authenticity in the sea of content. Some artists and social trends seem to have picked up on this already. As a culture, we have to champion the value of human creativity for its own sake, lest we fall prey to the next risk: getting futility-sniped.

The Second Horseman: Futility-Sniping

Imagine you were on a basketball team in high school and LeBron James joined the team. Would you quit playing? If you answered yes, you might be at risk of getting futility-sniped. For some people, meeting someone who is better, smarter, faster or stronger than them is awe-inspiring. For others, it triggers a deep sense of despair. A portmanteau of futility & nerd-sniping, futility-sniping is what happens when people look at the rapid progress of AI and decide that their own efforts are pointless. Why bother writing that novel, learning a new skill or mastering a craft if AI (or in some cases, a robot) can do it better? This is exactly the sort of pernicious mindset that we need to guard against as AI (and robotics) continue to advance and encroach on various domains of human ability.

Striving is a fundamental part of being human. From the ancient myths of Odysseus, Sisyphus, and Sundiata to the everyday struggles that go into building the lives we want for ourselves, we can see that having things to strive for, things worth struggling for, gives meaning to our existence. With nothing to struggle with or work towards, we might be overcome with a sense of ennui that could cause our agency and talents to decay over time. If we let AI convince us that our efforts are futile, we risk losing the will to create, to grow and to strive towards ever greater heights. Whereas sloprot threatens our taste, futility-sniping threatens our agency.

Going back to the basketball example, I can’t imagine that anyone becomes a worse player from having great players on their team. In that sense, I’d encourage people to think of AI as a tool rather than a competitor. It can help us grow faster and perform better, much like working with star players can help to improve your abilities in a given sport or profession. In the best case, AI can be a tool that helps you reach heights you couldn’t get to alone, and be a real difference maker on your ‘team’ so to speak. As the abilities of AI continue to improve, it's important that we retain the resilience, creativity and desire that make us human, and leverage those things along with our powerful tools (or teammates) to find new horizons worth reaching towards. Ultimately, we want to avoid having our agency eroded, without resisting the real benefits that these new tools can bring us.

The Third Horseman: Hazardlock

The mindset we approach these changes with is a huge determinant of what we’re going to get out of the future. While some anxieties around AI and the pace of technological development manifest as futility, others manifest as resistance. I don’t mean the healthy sort of resistance that essentially amounts to reifying one’s agency; I mean resistance that manifests as efforts to slow down or halt the pace of progress altogether. This is the mindset I refer to as being hazardlocked (or hazard-pilled, if you prefer). The hazardlocked see AI advancement as a runaway train headed for disaster, which compels them to try to stop the train at all costs. In this sense, they are in a state of (one-sided) deadlock against the continued progress or proliferation of artificial intelligence. The issue with this mindset is that stopping now doesn’t guarantee a safer outcome. While getting futility-sniped causes one to relinquish their agency, hazardlock is a misallocation (a waste) of it. It’s possible we could end up worse off if we just stop here, especially since there are so many problems that have yet to be solved in the AI models & systems that are already in use today.

This is not to say that AI advancement cannot be a source of potential hazards. Rather, the important thing to emphasize is that it is unlikely that the risk of encountering hazards grows linearly with the rate of technological progress. Imagine the relationship between technological progress and societal risk as an ‘n’-shaped curve (an inverted U): hazards may increase at first, but beyond a certain point, they begin to decline as society becomes better equipped to address them. Slowing progress might keep us stuck on the left side of the curve, prolonging the phase where risks are still climbing towards their peak. In contrast, advancing technology mindfully could help us reach the other side faster. Once we’re on the other side of the curve, we should be able to leverage AI to mitigate risks rather than multiply them.

History offers many examples of similar dilemmas. Technological revolutions are frequently disruptive in the short term, despite providing transformative gains over the long term. Take the invention of the spinning jenny and the power loom in the late 18th century, for example. Given that a single power loom could replace as many as 30 hand-operated looms, these innovations drastically reduced the demand for textile workers. The disruption this caused in the labor market led to riots and catalyzed the formation of the Luddite movement. These riots didn’t stop the adoption of these technologies, which would go on to create new jobs as they generated new demand for more widely available and lower-cost textiles.

Blindly rushing towards some vision of progress isn’t the key to navigating the times we are in, but trying to stop the shift altogether is probably an ineffective way to go about making a positive impact. The issue with getting stuck in hazardlock is that it is a state of mind that is induced by short-term, first-order thinking. It’s tempting to think that halting progress will solve our problems, but doing so risks prolonging conflicts and delaying solutions. The cat is already out of the bag.

The alternative to halting progress is for us to thoughtfully navigate how we pursue progress in AI as a society. We need fewer people calling for us to stop AI development altogether, and more people thinking about how to balance tradeoffs between the benefits of innovation and the risks it may pose. Businesses should prioritize the long-term impacts of these technologies over short-term increases in shareholder value, and we ought to encourage our lawmakers to create policy frameworks that encourage innovation while minimizing societal harm. This means investing in AI safety research, promoting transparency in AI development, and doing what’s needed to make sure AI’s benefits are equitably distributed across society—among other measures, the identification of which is left as an exercise for the reader.

The Fourth Horseman: Desynchronization

The final risk I want to discuss is most similar to the classical risk I imagine many people are thinking of when they talk about AI. That is the risk of desynchronization. By desynchronization, I mean to encompass two kinds of misalignment: one is between AI and humans, the other is between groups of humans aided by AI. At one extreme this could manifest as misaligned AIs acting in adversarial ways that pose existential threats to humanity. At the other extreme it could lead to AIs becoming direct actors in geopolitical conflicts. But even if we exclude these large-scale risks, small misalignments in more ‘mundane’ AI systems could create negative externalities that ripple through society.

A recent tweet from Sam Altman provides a good example of commonplace AI algorithms that are already exemplifying these risks. In it, he states that “algorithmic feeds are the first at-scale misaligned AIs”. This kind of subtle misalignment might seem trivial, but small nudges in the wrong direction can have a big impact over time. These feeds are designed to maximize engagement, and often prioritize outcomes that harm humans by amplifying division, promoting misinformation, and driving compulsive behavior. If we accept that tiny misalignments will have outsized impacts on our society over time, we ought to prioritize understanding how and why AI models work the way they do, and be thoughtful about where we want them to intervene in our lives.

Even on an individual level, it can be difficult to imagine what a perfectly aligned AI looks or acts like. I’m skeptical of the notion that on an individual level, alignment simply means that AI does our bidding and functions as a pure extension of our will. That viewpoint doesn’t take into consideration that sometimes our will isn’t what's best for society—or for ourselves. I’m equally skeptical of AI that decides what is right and wrong for me. I don’t want an AI nanny monitoring what I do, reporting me to some authority when I do things it doesn’t like or stopping me from doing what I want to do. Don’t tread on me. An AI embodying pure superego could be just as harmful as one that embodies pure id.

Perhaps it’s helpful to identify some small examples of AI that are already practically aligned (or misaligned) so that we can think more clearly about what we should aim for on a grander scale. I think autocomplete and spellcheck are great examples. These tools are both intended to help us modify our writing so that it can be better understood by others. On the one hand, spellcheck helps us to communicate clearly without deciding what we must say. On the other hand, autocomplete decides what we ought to say. One might argue that spellcheck is practically aligned, whereas autocomplete may be practically misaligned.

While spellcheck might make us a little worse at grammar, it empowers us to communicate more effectively—aligning our intentions with society’s standards for language, without telling us what to say or how to think. Maybe alignment on an individual level looks something like that: models that help us increase our impact and connect with others more effectively without shifting or diluting our intentions.

Scaling this idea up, a well-aligned AI might not blindly follow orders or enforce rigid rules. It would be neither spy nor slave. Instead, it could function like a translator or editor, helping us to refine our ideas and communicate clearly so that we can focus on the outcomes we aim for. Designing AI that acts like a partner rather than a servant—or spy—might yield a more robust synergy between human creativity and machine intelligence.

Although most of us won’t have a say in how AI is deployed at the level of governments or corporations, we can still be thoughtful about the role it plays in our daily lives. Asking ourselves what alignment looks like on a personal level, especially in terms of where, when and how we want AI to intervene in our lives, can help us make better choices about the tools we use. Small shifts in our habits and thoughts could produce a much better future for us on both a micro and macro scale. Though it may not be possible for us to constantly be in sync with our AI systems (or each other, for that matter), that doesn’t mean it isn’t worth trying.

Towards a Future Worth Living In

These Four Horsemen (Sloprot, Futility-Sniping, Hazardlock, and Desynchronization) are not things we should start worrying about in some distant future. They’re already impacting society today, and we need to defend against them as we continue to expand the scope, scale, and speed of AI development. If we don’t pay attention to them, we could lose a lot of things that matter deeply to us: our sense of taste, our sense of agency, our rate of progress and our ability to coordinate with one another.

It’s worth emphasizing that we have to guard against all four, perhaps in sequence. Even if we avoid having our taste corrupted by sloprot, we still have to ensure our agency doesn’t get futility-sniped. It’s equally important that our agency doesn’t get stuck in hazardlock, or it will be spent on something that is likely counterproductive. Assuming we manage to go on with our taste and agency intact, we might be able to ask better questions and propose novel solutions to make sure that neither humans-and-AI nor humans-with-AI become too desynchronized to coordinate with one another and plan a worthwhile future together.

If we sleepwalk through the AI revolution, there's no telling where we’ll end up as a culture when we get to the other side. But it's not too late for us to take decisive action to prevent the worst outcomes. We can choose to be more discerning about the content we consume. We can choose to persevere in our efforts to acquire and master new skills. We can choose thoughtful progress over blind resistance or blind acceleration. And we can choose to build AI systems—and societies—that favor cooperation over conflict. The trick is in “staying with the trouble”. By remaining vigilant and accountable for the future we’re creating, we can ensure these Horsemen remain cautionary tales, rather than prophecies waiting to unfold.